Sentiment Voice

As a continuation of my senior capstone work, I was hired to reduce the latency of the project's machine-learning and natural language processing models and to adapt the implementation for a live VR performance.

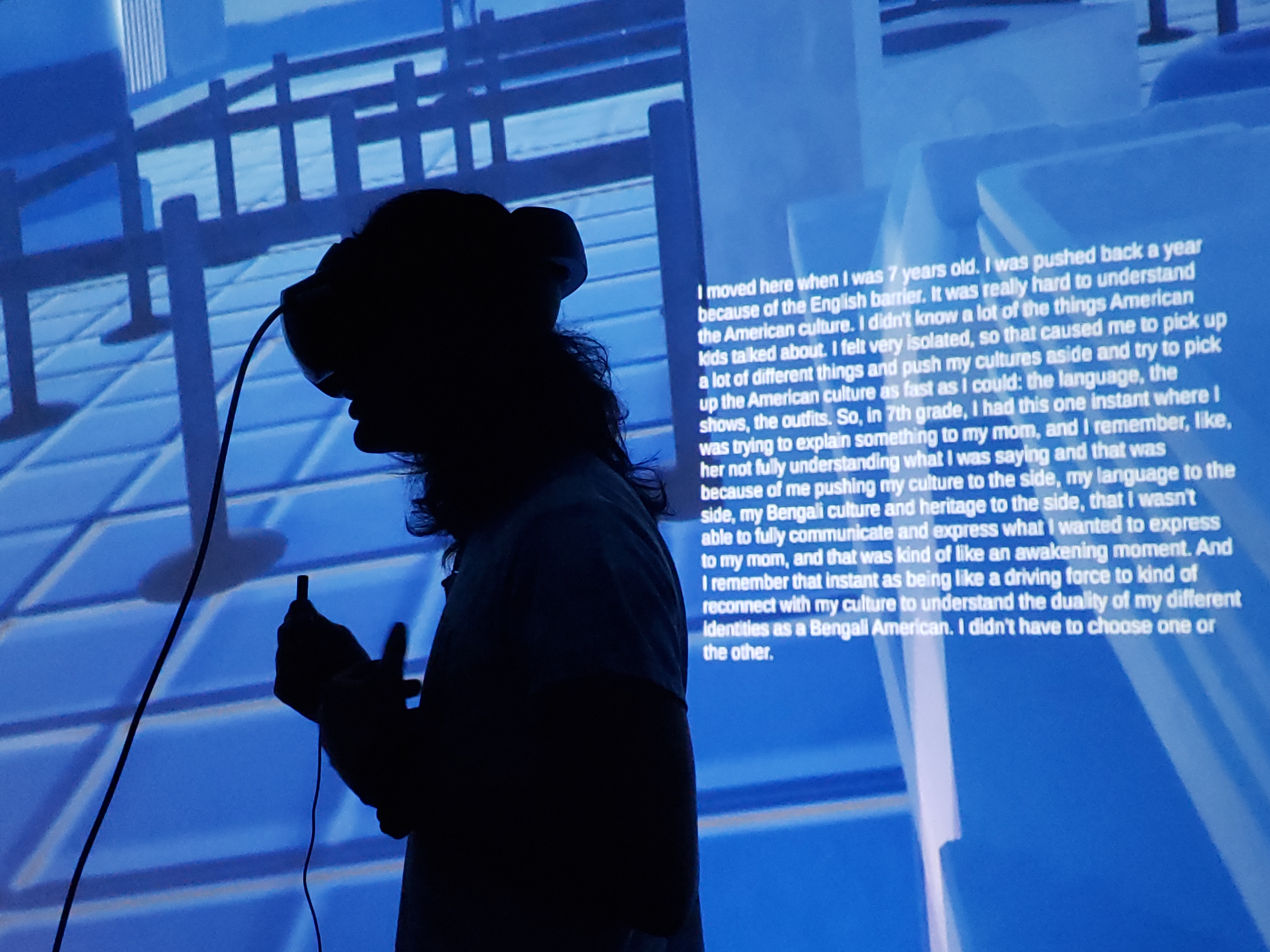

We successfully reduced facial emotion recognition time from an average of 2 s to 0.3 ms by moving the model from an external API onto the device itself (the VR headset). My collaborator, Miles Popiela, and I also implemented a more verbose speech-to-text system that significantly improved speech recognition. We used these advances in a real-time performance with two actors who walked through an adaptive environment that changed based on the emotions detected in their speech and facial expressions. The process will also be presented at engineering, new media, electronic arts, and VR conferences and venues, such as ACM, IEEE, ISEA (International Symposium on Electronic Art), SIGGRAPH XR, CAA (College Art Association), A2RU (The Alliance for the Arts in Research Universities), Ars Electronica, the International Conference on Machine Learning (ICML), and the International Conference in Computer Science (ICCS).
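Average-latency figures like the ones above are only meaningful when both pipelines are timed the same way over the same inputs. The following is a minimal, illustrative Python sketch of such a timing harness, not the project's actual code (which ran inside Unity on the headset). The function name `mean_latency_ms` and the `warmup` parameter are hypothetical, and the inference callable is assumed to take one frame and return a prediction.

```python
import statistics
import time
from typing import Callable, Sequence


def mean_latency_ms(
    infer: Callable[[object], object],
    frames: Sequence[object],
    warmup: int = 3,
) -> float:
    """Return the mean wall-clock time per inference call, in milliseconds.

    Hypothetical helper: `infer` stands in for any classifier call, e.g. a
    request to a remote API or a model running locally on the device.
    Raises statistics.StatisticsError if `frames` is empty.
    """
    # Warm-up calls are not timed, so one-time costs (model loading,
    # connection setup, caches) do not inflate the average.
    for frame in frames[:warmup]:
        infer(frame)

    timings_ms = []
    for frame in frames:
        start = time.perf_counter()  # monotonic, high-resolution clock
        infer(frame)
        timings_ms.append((time.perf_counter() - start) * 1000.0)

    return statistics.mean(timings_ms)
```

Running this once with a hypothetical remote-API classifier and once with an on-device classifier over the same recorded frames yields directly comparable averages, because network round-trip time is included in the first measurement and absent from the second.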

Technologies: Unity, Python, Machine Learning

Timeline: 01/2024-08/2024